About Me

I am a Postdoc Researcher at TU/e AMOR/e Lab, working with Prof. Joaquin Vanschoren on LLMs and MLLMs. Previously, I was an ELLIS Ph.D. candidate at VISLAB, University of Amsterdam (UvA), supervised by Prof. Efstratios Gavves and Prof. Jan-Jakob Sonke. I had a fantastic internship at Meshcapade, advised by Dr. Yan Zhang, Dr. Yu Sun, and Prof. Michael Black. I have also worked closely with Prof. Pan Zhou.

I serve as a regular reviewer for CVPR, ICCV, ECCV, NeurIPS, ICLR, ICML, T-PAMI and IJCV.

Research Interests

My research aims to develop human-centered AI that augments human capabilities in perception, reasoning, and interaction with the world. To this end, I focus on the following topics:

• Generalizable Perception: interactive segmentation, few-shot segmentation, 3D scene understanding

• Foundation Models: LLM and MLLM reasoning, vision-language models, multi-modal learning

• Embodied Agents: human-scene interaction, 3D digital humans, multi-agent cooperation

Hobbies

I'm a sports enthusiast with a passion for snowboarding and badminton. I also hold a 2nd-degree black belt in Taekwondo. Whether on the slopes, on the court, or in training, I love pushing my limits and sharpening my skills.

News

- [Nov 2025] I started my postdoc at TU/e, working with Prof. Joaquin Vanschoren on LLMs and MLLMs.

- [June 2025] One paper on vision-language models was accepted to ICCV 2025, see you in Hawaii!

- [June 2025] I started my internship at Meshcapade, working with Dr. Yan Zhang on motion generation.

- [May 2025] Our work NPISeg3D on Interactive 3D Segmentation was accepted to ICML 2025! See you in Vancouver!

- [Jan 2025] CaPo, our first work on Embodied Agents, was accepted to ICLR 2025, see you in Singapore.

- [Sept 2024] I am visiting Prof. Pan Zhou in Singapore.

- [July 2024] We present CPlot for Interactive Segmentation at ECCV 2024, see you in Milano.

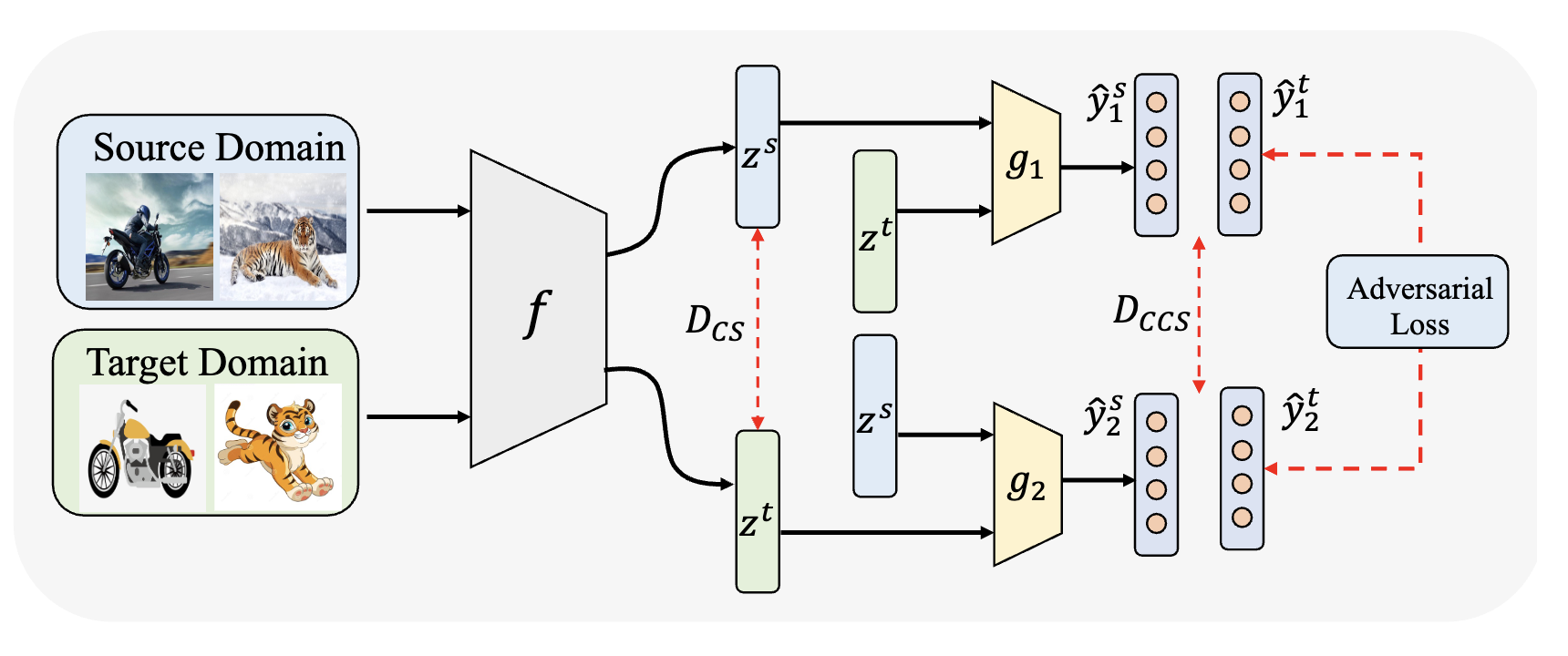

- [June 2024] One paper with Wenzhe Yin on Domain Adaptation was accepted to UAI 2024, see you in Barcelona.

- [Sept 2023] We present prototype adaptation for Few-shot Point Cloud Segmentation at 3DV 2024, see you in Davos.

- [Sept 2022] Our work on Few-shot Segmentation with Graph Convolutional Network was accepted to BMVC 2022.

- [June 2022] One paper with Haochen Wang on Few-shot Segmentation was accepted to ACM MM 2022.

- [March 2022] Our work on Few-shot Segmentation with Prototype Convolution was accepted to CVPR 2022.

- [Sept 2021] I joined VISLAB as a PhD candidate.

Recent Projects

Probabilistic Prototype Calibration of Vision-language Models for Generalized Few-shot Semantic Segmentation

Jie Liu, Jiayi Shen, Pan Zhou, Jan-Jakob Sonke, Efstratios Gavves

International Conference on Computer Vision (ICCV), 2025

A probabilistic prototype calibration method for vision-language models in generalized few-shot semantic segmentation, improving model generalization without forgetting.

[Paper] [Code]

NPISeg3D: Probabilistic Interactive 3D Segmentation with Hierarchical Neural Processes

Jie Liu, Pan Zhou, Zehao Xiao, Jiayi Shen, Wenzhe Yin, Jan-Jakob Sonke, Efstratios Gavves

International Conference on Machine Learning (ICML), 2025

A probabilistic method for interactive 3D segmentation that facilitates uncertainty estimation and few-shot generalization.

[Project] [Paper] [Code]

CaPo: Cooperative Plan Optimization for Efficient Embodied Multi-agent Cooperation

Jie Liu, Pan Zhou, Yingjun Du, Ah-Hwee Tan, Cees GM Snoek, Jan-Jakob Sonke, Efstratios Gavves

International Conference on Learning Representations (ICLR), 2025

An efficient plan optimization method with LLMs for embodied multi-agent cooperation.

[Paper] [Code]

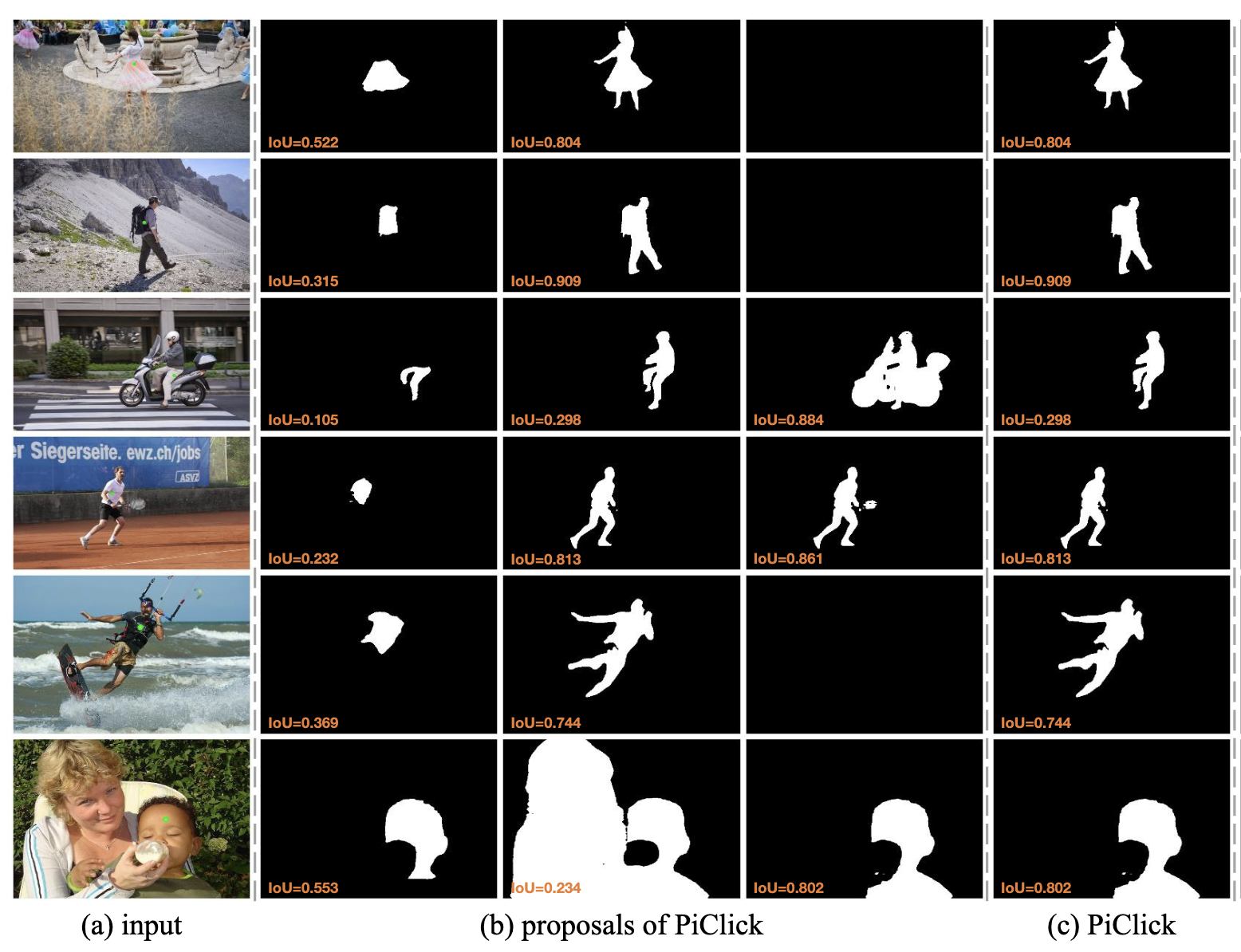

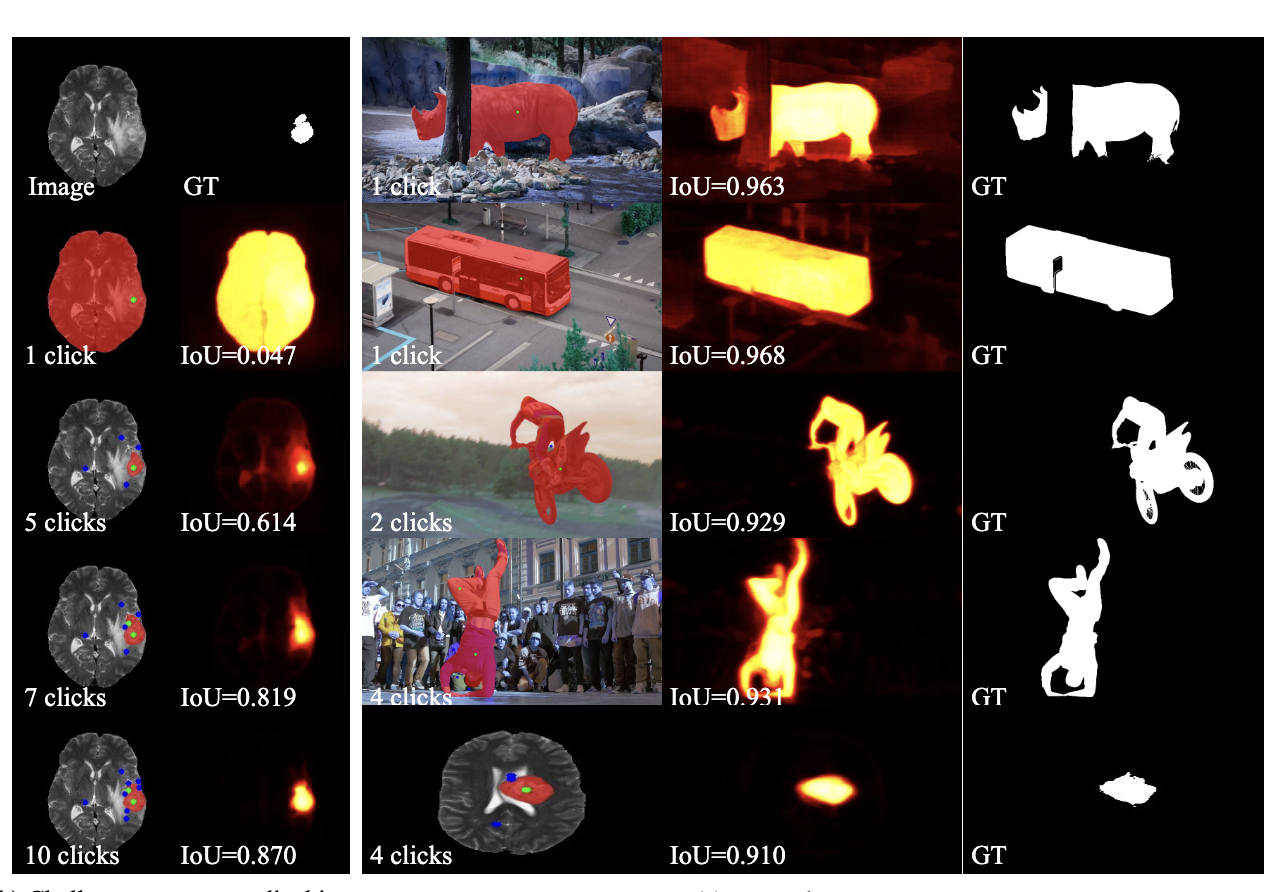

CPlot: Click Prompt Learning with Optimal Transport for Interactive Segmentation

Jie Liu, Haochen Wang, Wenzhe Yin, Jan-Jakob Sonke, Efstratios Gavves

European Conference on Computer Vision (ECCV), 2024

A click prompt learning method with optimal transport for interactive segmentation to improve interaction efficiency.

[Project] [Paper] [Code]

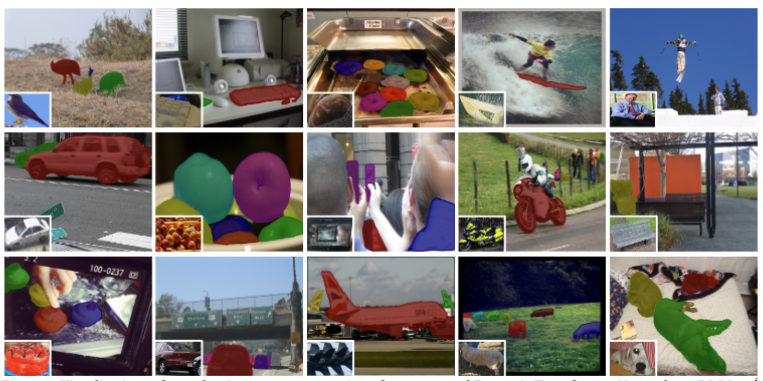

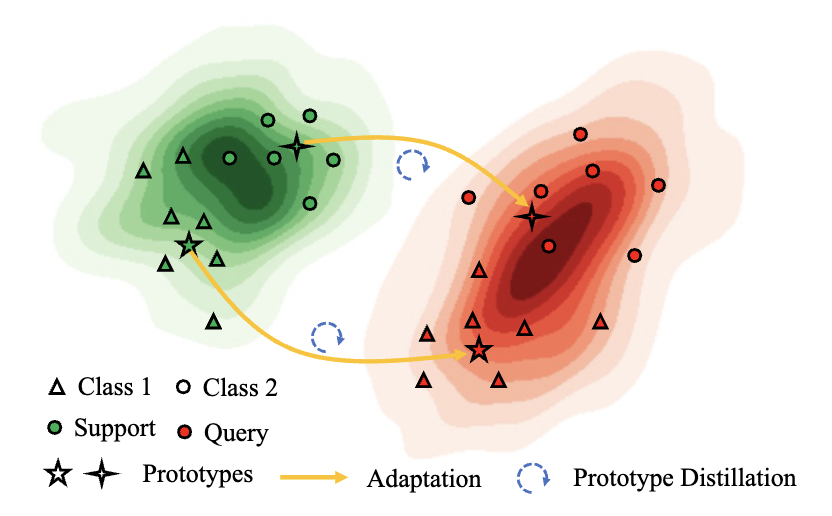

Dynamic Prototype Adaptation with Distillation for Few-shot Point Cloud Segmentation

Jie Liu, Wenzhe Yin, Haochen Wang, Yunlu Chen, Jan-Jakob Sonke, Efstratios Gavves

International Conference on 3D Vision (3DV), 2024

A prototype adaptation method for few-shot point cloud segmentation.

[Paper] [Code]

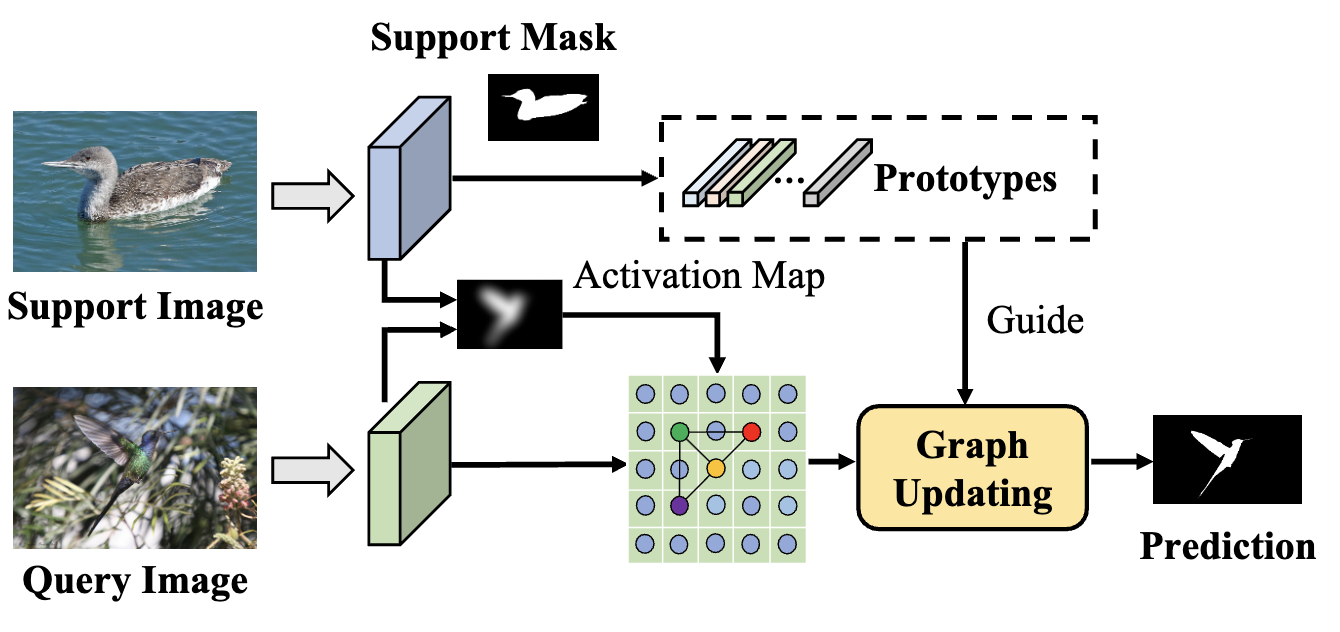

Few-shot Semantic Segmentation with Support-Induced Graph Convolutional Network

Jie Liu, Yanqi Bao, Haochen Wang, Wenzhe Yin, Jan-Jakob Sonke, Efstratios Gavves

British Machine Vision Conference (BMVC), 2022

A graph convolutional network for few-shot semantic segmentation with support-induced structure.

[Paper] [Code]

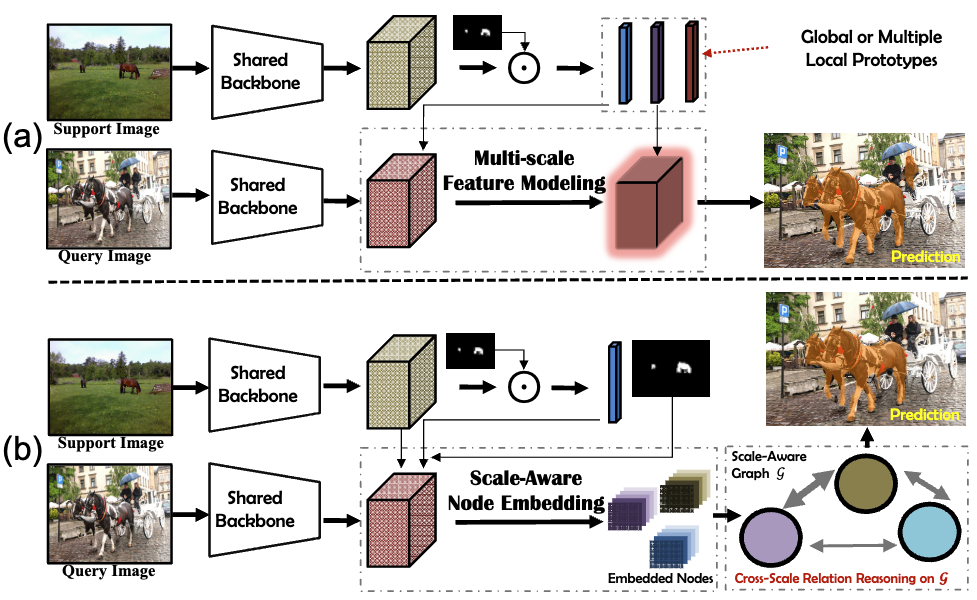

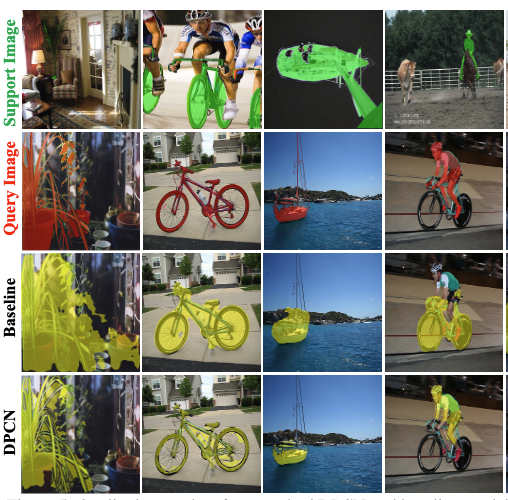

Dynamic Prototype Convolution Network for Few-shot Semantic Segmentation

Jie Liu, Yanqi Bao, Guosen Xie, Huan Xiong, Jan-Jakob Sonke, Efstratios Gavves

Conference on Computer Vision and Pattern Recognition (CVPR), 2022

A dynamic prototype convolution network for few-shot semantic segmentation to improve model generalization.

[Paper] [Code]